Reading: People, Processes, and Managing Data

PEOPLE, PROCESSES AND MANAGING DATA SECOND EDITION

Gerald W. McLaughlin Richard D. Howard

with

Lore Balkan Cunningham Elizabeth W. Blythe Ernest Payne

THE ASSOCIATION FOR INSTITUTIONAL RESEARCH

Number Fifteen Resources in institutional Research

INTRODUCTION

Knowledge Management is the current "in-thing." During the past 20 or so years, Data Management evolved into Information Management (remember IMS and MIS?) and Information Management evolved into Knowledge Management. As you will see, we fully support the concept that knowledge should be managed and include discussions about processes that facilitate the use of information to influence decision making. We object however to the claim by some that Knowledge Management is more important than Information Management or Data Management. As you see in our discussions, all three are key elements to quality decision support. The purpose of People, Processes, and Managing Data is to provide a conceptual and practical framework for creating a quality foundation of data that is necessary for the creation of quality information, quality knowledge, and their management in our colleges.

About 20 years ago, several of us working in institutional research positions were active in both AIR and CAUSE (now EDUCAUSE). In our conversations, we realized that while we did not get into our jobs to manage institutional data, without structured processes for managing data, we could not do our jobs of providing quality decision support information to the leaders on our campuses. Without sufficient quality data our efforts in providing quality decision support and planning information would be, at best, minimal and at worst misleading and destructive. As we developed workshops and papers on topics related to managing data it became clear that technologies (hardware and software) were important tools, but not solutions to the challenges of creating quality institutional data. This reality continues to surface as we talk with colleagues about barriers that limited their ability to have data of sufficient quality to do our jobs. As a result, over the years we talked about and worked on products such as data dictionaries and administrative data bases and worked with tools like data warehouses and desk-top computers. And, the outcomes of these discussions and work were the basis of the first edition of People, Processes, and Managing Data.

As we look back over the past six years, a great deal has changed.

Desktop computing, interfacing with client-servers are now the norm. ERP (Enterprise Resource Planning) systems are being integrated with specialized systems. With integrated and relational data structures these enterprise systems provide the opportunity to build CRP's (Customer Resource Planning) and such. ETL (Extract, Transform, and Load) tools and CASE-type tools give drag-and-drop capability for managing and analyzing data. Data Marts, Data Cutting Tools, and web sites such as IPEDS PAS (Peer Analysis System) and CUPA-HR DOD (Data on Demand) are changing the way we do business. Similarly, analytical tools are available such as OLAP (On-Line Analytical Processing), Data Mining, and statistical software systems. These increased capacities to use data and enhanced ability to distribute data and information to a

iii

much broader range of users both on and off campus have resulted in greater expectations for quality data. Our understandings of - and the conclusions we draw about - our institutions based on our analytical research still depends on the quality of data in our management and reporting systems. One thing however has not changed: software systems designed to support operational systems provide little support in the development of management and reporting systems.

The problems of poor data have not been directly addressed in the evolution of data management software and hardware. Because challenges to data quality continue to exist on virtually all campuses, we continue to offer a module that addresses data management at the AIR Foundations Institute. And, while much of what was published in our earlier volume is relevant to the successful development and management of management and reporting systems, technology has changed and our understanding of data management has become more refined. As such, it seemed appropriate to update People, Processes, and Managing Data, incorporating our latest thinking and practices about managing data and describing the use of some of the information technology that has become available during the past six years.

Over the past six - seven years, the four of us have continued to be involved in data management activities. Betsy and Lore have worked on the creation and operation of a data warehouse and while much of the work they did is reflected in the first edition, they are pursuing other information technology activities at present. Rich has become a Professor and now teaches both colleagues and graduate students about the complexities of institutional research, the processes of decision support, and the use of data in the creation of information to support institutional planning and management. Gerry has returned to some of the basics of managing data, chairing a Management Information Group, co-chairing an effort on standardizing student data, and helping to establish a data element dictionary both for attribute and entity data.

Because of the value of understanding the steps one might take to produce a managed data structure, we are grateful to Ernie Payne from the University of Arizona for his description of the UA data warehouses and for sharing the strategies and lessens learned during the development of their operational and administrative data warehouses over the past 15 years.

Much has changed - much has not changed. However, the basis for successful institutional research remains dependent on People, Processes, and Managing Data.

Gerry Mclaughlin Rich Howard

iv

FOREWORD

All data is meta to something.-David McGoveran (from a tag line on the Internet)

Welcome to our discussion on managing data. Why are we discussing data management? The answer is simple: We all make decisions and often wish we had better data and information to make more intelligent decisions. We firmly believe that at the core of a "quality" institutional research function is the ability to add value to facts in, on, and about the institution. We also believe that if we, as a profession, can develop a structured management process, which adds value to the data in our institutions, then we have identified a core methodology for institutional research.

As new technologies have become increasingly core to the management of our institutions, it has become painfully clear that technology or more complex software does not solve the problem of poor data. The value of data in support of decision making will only improve when the data are strategically managed. There are key systematic, sequential activities which must be performed to produce usable data; and, the foundation of institutional research starts with usable (or quality) data. It is these systematic, sequential, and significant activities which are identified and described in this monograph.

Using Peter Senge's (1990) notions of organizational learning as a backbone, the discussion in Chapter 1 describes the decision-making environment and data use typically found at our colleges and universities. In Chapter 2, a sequential five-function model is described which takes basic data and transforms them into information designed to increase intelligence in the decision-making process. These functions require various activities, tasks, and events, which are described in Chapters 4, 5, and 6. In each of these chapters, a specific role in the data management process is described. In Chapter 4, we describe the Custodian who supplies the data. In Chapter 5, we describe the Broker who restructures the data into information. In Chapter 6, we describe the Manager who supports increased knowledge and accountability across the institution by using data-based information in decision making.

Structured institutional data management and data-based decision making will, at most institutions, require a change in the way decision making occurs. In Chapter 7, we discuss some of the integral issues and barriers to change which you may experience and we seek to explain how you might deal with some of the individuals on your campus who may not be ready to embrace data-based decision making.

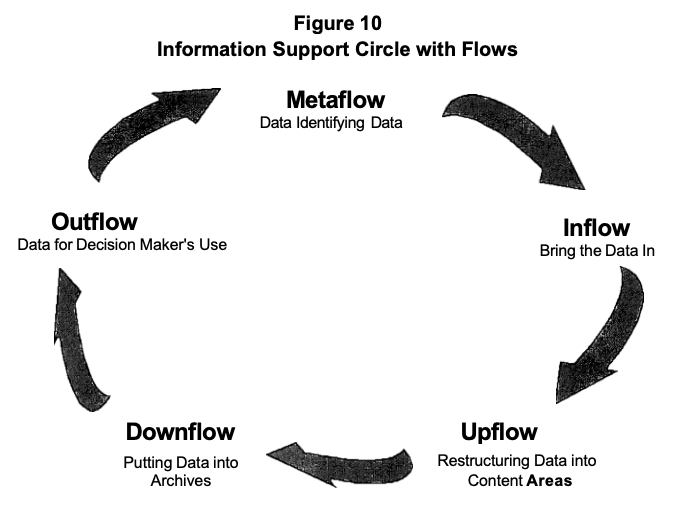

Throughout the monograph, frequent problems with data management are identified and some probable causes and possible cures are discussed. The detailed steps in the management of data and the corresponding checklists are designed to make institutional data and information a strategic institutional asset. Our conceptual model, the Information Support Circle, guided the development of the data management processes outlined in this monograph.

V

The Information Support Circle was adapted from the scientific method we learned in high school chemistry. Ideas are identified, unknowns are established, beliefs identify testable hypotheses, data are collected and analyzed, interpretations are developed, and conclusions are presented and focused on a new set of relevant unknowns. This monograph follows a parallel process designed to add value to institutional data and information.

While the monograph is based on this conceptual model, the work has been developed from a very pragmatic and applied perspective. We have a combination of more than half a century of experience (unevenly distributed) in institutional research. The issues described became important to us from a basic desire for survival in our institutions. Our organizational responsibilities have required that we apply various methodologies to support the management and planning of higher education. These initiatives were neither relevant nor rewarded if the data were unusable in the decision making or planning processes we were charged to support.

We started thinking seriously about the concepts of data quality some 12 years ago, writing articles, presenting papers, and conducting workshops on the topic. In writing this monograph, we have used much of the material developed in preparation for these activities, modified as we have learned. The following ideas represent a much clearer vision of how we think the management of data works than when we started thinking through these issues more than a decade ago.

The flow of ideas in this monograph is designed to create a logical focus on strategies, which involve the entire organization in data quality improvement processes. To this end, we guide the reader through a sequence of:

1. thinking about the management and use of data;

2. considering the problems which may exist in providing needed information support;

3. describing the steps to create stable, consistent, and documented data;

4. describing the steps in brokering the standardized data into an institutional asset;

5. describing the steps in moving the data into production and use; and,

6. considering the processes needed to support the continual improvement of data quality and information available to decision makers.

The ideas presented in this monograph are our beliefs, refined by applying the Information Support Circle to support systematic problem solving and decision making. We do not represent our ideas as the only

way to manage and improve data, but as food for thought, hoping that we can all continue to learn. Bon Appetit!

About the Authors

Gerald W. Mclaughlin currently is Director of the Office of Institutional Planning and Research DePaul University where he has worked since 1999. Formerly he was Director of Institutional Research and Planning analysis at Virginia Tech. He is active in the Association for Institutional Research (AIR) and has presented papers and workshops on managing data and decision support in IR to include presentations at the Foundations for the Practice of Institutional Research Institute. He has served as AIR President, Forum Chair and as President and Chair of the annual meeting for the Southern AIR. He has also chaired the AIR Publications Committee, edits IR Applications and the AIR Professional File, and worked with the CAUSE/EFFECT editorial committee for EDUCAUSE (formerly CAUSE). His current interests include analytical methodology and decision support.

Richard D. Howard is currently a Professor of Higher Education at Montana State University-Bozeman. Before taking this position he served as Director of Institutional Research at West Virginia University, North Carolina State University and the University of Arizona. He is active in AIR, currently serving as Editor of the Resources in Institutional Research book series, has presented more than 50 papers and workshops on managing data and decision support in IR, and serves on the Foundations for the Practice of Institutional Research Institute faculty. He has served as President and as Forum Chair for AIR and as President of SAIR. He has also chaired the Professional Development Services Committee for AIR and SCUP, and served on the CAUSE/EFFECT editorial committee for EDUCAUSE (formerly CAUSE). His current interests include the creation and communication of data and information to support planning and decision making, and the development of graduate programs to train institutional research professionals.

Lore Balkan Cunningham has worked in the area of data management for 25 years. She recently retired from her position as Data Administrator and Assistant Director of Information Warehousing and Access at Virginia Tech. Prior to this position, Lore worked on several special data quality projects in the Office of Institutional Research at Virginia Tech. Lore has been a frequent presenter at the EDUCAUSE and CUMREC conferences and co-chaired the EDUCAUSE Decision Support Constituency group. She co-authored several articles in the CAUSE/EFFECT magazine and served for three years on the CAUSE/ EFFECT editorial committee. Lore previously served on the national board of the Association for Information Technology Professionals (formerly DPMA).

Elizabeth W. Blythe is currently Project Director of the Information

vii

Warehouse and Access project at Virginia Tech. She has worked in Information Systems for more than 30 years as a developer, a systems engineer, and a manager. Prior to her current position, she worked in the Office of Institutional Research at Virginia Tech for a year. She has conducted several workshops at SAIR. Her current area of interest is in providing easy user access to data through the Web - managing data, and transforming it into information.

Ernest Payne retired in 2004 after 38 years at the University of Arizona working in large data center operations, software, planing, financial management, administrative systems, databases, and data warehousing. The last 12 years he was the principal architect and project lead for the University of Arizona's Integrated Information Warehouse providing web based and direct access to current and historical data on all facets of the institution to senior administrators, deans, and the people they turn to for information.

viii

CHAPTER 1 INFORMATION AND THE ORGANIZATION

The second requirement of knowledge-based innovation is a clear focus on the strategic position. It cannot be introduced tentatively. The fact that the introduction of the innovation creates excitement, and attracts a host of others, means that the innovator has to be right the first time. He is unlikely to get a second chance. (Drucker, p. 117)

In all sectors of our society and economy, information is used to reduce the level of uncertainty in decision making. It is both an intuitive and methodical process by which more knowledge about the environment is accumulated. Our educational institutions are no exception. Higher education is facing serious management challenges as institutions cope with rapidly changing technology, a fluctuating economy, and increasing demands to produce more with less. These challenges change the way decisions are made, what data are useful, and what data are necessary. All organizations are challenged to create an environment where relevant and usable information can be accessed when needed by both employees and management. This has recently worked its way to a fascination with the Balanced Score Card, Performance Indicators, and a multitude of web based products, often under the rubric of "knowledge management" (Tiwana, 2002).

Making Data Usable

The effectiveness of institutional research in supporting an institution's decision making depends heavily on the availability of usable data. Usable implies that the data are sufficiently accurate, timely, and collected systematically. The institutional research function is often called upon to provide data or create usable information to depict history, describe the current status, and anticipate the future. There are natural barriers which limit the value of data and information.

Data problems are mentioned most often as the major barrier limiting the effectiveness of institutional research and, as such, planning and decision making. Unfortunately, there is often a lack of understanding about the way that the data should be used, skepticism about the validity of the data, distrust because of obvious data defects or errors, lack of access to the data, and often only lukewarm sustained management support for remedying these problems.

The most common factors which limit data quality and which have been identified through the years at professional gatherings of institutional research professionals include:

Consistency of data definitions. Limiting factors here include no agreement on definitions; incorrect interpretations; data collected in varying forms across campus and across institutions; and, lack of adequate comprehensive measures.

Technology. Obstacles here are unsophisticated computer programs; lack of tools to maintain, transfer and analyze data; poor data collection processes; lack of data management tools; and, lack of ability to support distributed decision making. New computer-based operating and management systems have multiplied in the past decade and in many instances these obstacles have become more evident on our campuses.

Data Access. Problems in data access include the inability to access data, and limited user knowledge about what data exist and where to obtain the data.

The improvement of data is critical to the success of the institutional research function's ability to add value to our institutions planning and decision making, and is closely associated with many of our traditional functions. Years of experience tell us that there is no single best way to deal with the need to improve data quality. There are, however, some strategies which are more likely to be successful. In total, these strategies amount to creating a data management culture across the organization.

While the data do not need to be perfect, they do need to be good enough to meet the needs of the institution. To achieve appropriate data quality, an organization first needs to understand itself. The discussion of managing data needs to start with an understanding of common problems that plague our organizations. All organizations are unique, but they do have some common characteristics. The stage for using data must be set in terms of the organization's ability to learn. As noted earlier, the use of data is a learning process. The ability to manage the information infrastructure is a learned process, and institutional research is a process of assimilating information to support institutional learning. And, as stated earlier, increased knowledge and understanding reduces uncertainty in decision making.

Disabilities in Learning to Improve

Peter Senge, in his book The Fifth Discipline, provides two views of organizations. One view examines the learning disabilities, which can exist in an organization. The second view presents the organization as a learning organization, which uses the knowledge it acquires to continually improve. Senge's organizational learning disabilities (discussed below) are extremely appropriate when applied to the challenges we face when trying to use data to support decision making.

I am my position. People in the institution focus only on their tasks and have little concern for how this affects other people. Data flows across numerous desks and functional lines in the organization. The registrar who only works to clean data for registrar functions will never be a source of usable student data for other needs. The vice president who only wants clean summaries will never be the source of high quality detail data. The department head, who needs restructured data based at the program level, has little reason to input better data into a faculty timetable system.

2

The enemy is out there. Each of us has a tendency to blame people outside our immediate unit or department for organizational problems. Because data must flow across organizational lines, blaming others for data problems is natural. If systems are put in place that allow and expect blame, then data will always be a strong candidate for fault. Since perfect data only exist as a fantasy, the means, motive, and opportunity exist for blaming others for faults in the data, and for abdicating responsibility. The concern is about threats, not weaknesses.

The illusion of taking charge. Many of our reward systems require the leader get in front and do something. This is particularly expected when an obvious problem exists. Every so often, there will be a glaring problem with the data resulting in situations such as people who are deceased being invited to the president's reception, some receiving several invitations, or alumni, who have graduated, being contacted and asked why they are no longer enrolled. "Take-charge" leadership to quickly resolve such problems can be disastrous when the take-charge person does not understand complex technical processes. While symptoms are addressed, the underlying problems remain, with opportunities for real improvement displaced by hostilities directed at shortsighted, reactionary solutions.

The fixation on events. As a continuation of the take-charge process, the leader is prone to focus on events rather than results. Data management is a process that tends to focus on improving ineffective structures and events. As such, it is very difficult to maintain the support of a sponsor or senior manager while continuously focusing on errors.

The presence of good information is difficult to demonstrate as an event because it is simply assumed or expected. Furthermore, data improvement does not lend itself to being an exciting key performance indicator.

The parable of the boiled frog. If you throw a frog into boiling water, it will jump out. This, of course, makes some assumptions about the frog, the water, and the pot. If, however, the frog is placed in warm water, as the water is slowly brought to boiling, the frog will not hop out (at least this is what the book says}. The availability of detailed data has gradually attracted the increased interest and awareness of senior executives to the point where data access is sometimes an ego trip rather than the means for better decisions. Substituting "detail" for "intelligence" often occurs gradually until the data manager is confronted with the "awash in a sea of data" accusation by individuals who suddenly realize they do not "know" any more than they did in the years before the arrival of executive information systems (EIS}.

The delusion of learning from experiences. "We learn best from experience but we never directly experience the consequences of many of our most important decisions" (Senge, p. 23). Some critical decisions

3

are the hardware and software purchases made by the institution. The choice of a particular technology impacts many people other than those making the decisions, often several years after the decisions are made. While we learn from these experiences, sometimes painfully, we usually fail to apply this knowledge to the decision process. The decision process is often isolated from the experience.

The myth of the management team. Teams in organizations often tend to spend all their time fighting for turf, avoiding the hard decisions and avoiding things that make them look bad, all the while pretending to work as a cohesive team. This is strikingly similar to what has been referred to as the collegial process. For example, how many true team efforts exist between senior faculty and administrators? Yet data management requires the team effort of administrators and faculty. How many true team efforts occur between academics, facilities management, and financial administrators in a college or university? Yet these groups must combine management activities to capture, store, restructure, and deliver valid and reliable data. As we create credible data, the way decisions are made and the outcome of the decisions will change. This will ultimately shift the balance of power. Improved data is, therefore, a threat to some of the more powerful individuals at our institutions. This learning disability is the most regrettable, as it is the cumulative mechanism which allows and perhaps fosters the existence of the other disabilities.

A learning organization will not evolve naturally, but requires significant effort at all levels of the organization. The institutional research function is positioned to be an effective force in this evolution. Enhancing the value of data to create knowledge is traditionally an expectation of institutional research offices, growing out of a history of institutional research as a user, a producer, and sometimes the source of data for key institutional decisions. The institutional research function at most institutions has the relevant experience and expertise to meet the

challenge of providing or facilitating effective data management to support the learning organization.

The Learning Disciplines

With the backdrop of the organizational learning disabilities as related to data management, consider Senge's alternative for creating a learning organization that would provide the foundation for meeting the data management challenges. The following are antidotes and vaccines for the learning disabilities. They provide a way to either eliminate the learning disabilities, or reduce their impact on the organization. The examples provided with each discipline demonstrate sound data management as an integral part of the foundation for organizational change.

Personal Mastery. Personal mastery is founded on personal competence and skills, and extends to an awareness of the opportunities

4

to evolve one's life as a creative work. Mastery requires applying an understanding of current reality to the shaping of one's future. The strategy used in writing this monograph was to focus on the mastery of skills in areas most appropriate to individuals working with institutional data. We hope this will help to identify and guide in the development of skills necessary to provide data and information as one component of a credible and stable decision-making infrastructure.

Those who would help or lead others are advised by Senge: "The core leadership strategy is simple: be a model. Commit yourself to your own personal mastery. Talking about personal mastery may open people's minds somewhat, but actions always speak louder than words. There's nothing more powerful you can do to encourage others in their quest for personal mastery than to be serious in your own quest'' (Senge, p. 173).

Mental Models. ."..the discipline of managing mental models surfacing, testing, and improving our internal pictures of how the world works-promises to be a major breakthrough for building learning organizations" (Senge, p. 174). This monograph supports the development and use of mental models by presenting the process of managing data as a conceptual model having five functions, three roles, and two properties. We develop the five functions in Chapter 3 and the three roles in Chapters 4, 5, and 6. Finally, in Chapter 7, we discuss the two properties of the model.

We believe that the development of a mental model is itself one of the integral parts of successful data management. The refinement of this model comes after the use of data to influence a situation, frequently by a decision being made. It is when a decision is made that we see the value of structuring data to create information which is finally transformed into organizational intelligence and knowledge. However, because our model is circular, the process is iterative. It is always necessary to review the process of creating the information and the usefulness of the information to the decision maker. Our model supports the learning organization precisely because it represents a process of continuous reduction of uncertainty and improvement.

Shared Vision. Shared vision occurs when multiple individuals have a deep commitment to a commonly held purpose. Individuals are bound together by shared aspirations. The best shared visions reflect, and extend, the visions of individuals. As described in Chapter 2, our vision for data management is simple: Quality in our organizations must be supported by quality in our data. This vision, as represented by our circular model, is quality through knowledge and knowledge from learning. The best management is a process by which adjustments and key decisions are supported in an intelligence-rich environment in which the focus is on continued learning. For a vision to be accepted, it must become a shared vision. Such a shared vision is only possible if it is understood to be constantly evolving.

Team Learning. Team learning occurs when there is an alignment of the individual team members in the process of working together toward the next higher level of awareness. There are three critical dimensions. First, there is a need to think insightfully about complex issues. Second, learning is supported by innovative coordinated actions. Third, there is a need to identify and use multiple tools for data access and retrieval. The roles described in Chapters 4, 5, and 6 are intended to support the development of teams, which function across these three dimensions.

We suggest processes and activities by which teams can work to fulfill the necessary roles. Additionally, in Chapter 5, we identify and discuss various groups that can be formed to learn collaboratively and work cooperatively toward data management goals. In Chapter 7, a discussion of lessons learned and issues related to the change process, provides a background of shared experiences so that others can more quickly develop innovative coordinated actions.

Systems Thinking. The fundamental "information problem faced by managers is not too little information, but too much information. What we most need are ways to know what is important and what is not important, what variables to focus on and which to pay less attention to... and we need ways to do this which can help groups or teams develop shared understanding" (Senge, p. 129). Systems thinking involves seeing fewer parts and looking at the whole. By building on a circular model with the interlocking roles, we present a very complex process as a system.

By identifying problems and symptoms, we provide a structured way of thinking about this system and thereby simplify it. There can be no quality in the management of data unless all the parts work together and recognize their interdependence. This interdependence implies willingness to adapt and to change, as does the Information Support Circle, continually improving and evolving data to intelligence. In the final chapter, we discuss change as a systematic process.

Institutional Research and a Strategy for Change

The institutional research function can be an effective change agent in a cultural evolution, leading institutions toward becoming true learning organizations. This monograph provides a basic strategy from which institutional researchers can influence the major stakeholders in this evolution through a process of continually improving data quality, thus extending and expanding the use of management information across our institutions of higher learning.

The management of data and the ability of an organization to learn are intrinsically linked. The improvement of decision making comes from the increase of organizational intelligence. The symbiotic relationship between reduced uncertainty and organizational intelligence evidences the need for a close linkage between the learning organization and the improved management of data. The management of data, as we think of it, fits the paradigm for discussing the learning organization.

Unfortunately, the learning disabilities discussed above also fit.

6

Institutional research has an interest in the proper performance of key activities in functions which are responsible for creating planning and decision support information. It has an interest in the effective performance of the roles in a learning culture. Above all, it has an ongoing responsibility to affect change and learning by adding value to institutional data and information in our colleges and universities.

In the next chapter, we focus on describing the basis for our own learning that led to the creation of a conceptual model for achieving effective data management and information support. Chapter 2 begins with a basic view of the organization and the organizational roles that relate to information.

CHAPTER2

SOMETHING NEW: MANAGEMENT INFORMATION

The critical issue is not one of tools and systems but involvement in the quality efforts of the business units. (Radding, p. 100)

The impact of technology on our organizations is changing the way we do business. Just as the automobile changed from a luxury to a necessity, information technology is now integrated into the way we manage our institutions. An IBM advertising campaign once called for "new ideas for new challenges." This concept has three key elements relevant to people who work with information. First, there is a strong theme of change. New is the norm and change is the standard. A second theme is survival in the face of challenges. The way we do everything is subject to question because our world is changing at an ever-increasing rate. This theme acknowledges the ever-present threat that, if we fail to adjust, we will become obsolete. A third theme is less apparent, but provides the key to open new doors as we close the old. This third theme revolves around the word "ideas" to reinforce the notion that thinking and creativity are the sources of influence and change.

There is the belief that change will come from creating vision and from new ideas generated at all levels of our organizations. We ask the people at the pulse of every activity to interact and generate innovative ideas. The necessity of acquiring new skills and knowledge is accepted. The ability to learn new skills is critical. Ideas are molded into plans.

Resources are reallocated to support training on the new and improved practices and products. Learning from others comes by generalizing from their experiences and avoids the unwarranted expense of everyone learning everything anew in an organizational culture of "fail and trail" and "discover and recover."

A key organizational challenge is to make better decisions and provide better support for stakeholders. Information support must enable managers and decision makers to:

1. understand a situation and recognize the need to take action;

2. identify and rank alternatives considering resources, causality, and desirability of outcomes;

3. select an alternative, act upon it; and,

4. validate and defend the action.

The successful use of information for decision support depends on an information support structure that assures the quality and availability of relevant data that can be restructured for use by the decision makers. The old ways of providing information support do not adequately support new ways of doing business. The disappearance of middle management, the development of intelligent devices, and the refinement of strategic management, are all components of the new challenge. To meet this challenge, we need new ideas about providing information support for our organizations.

8

Organizational Changes

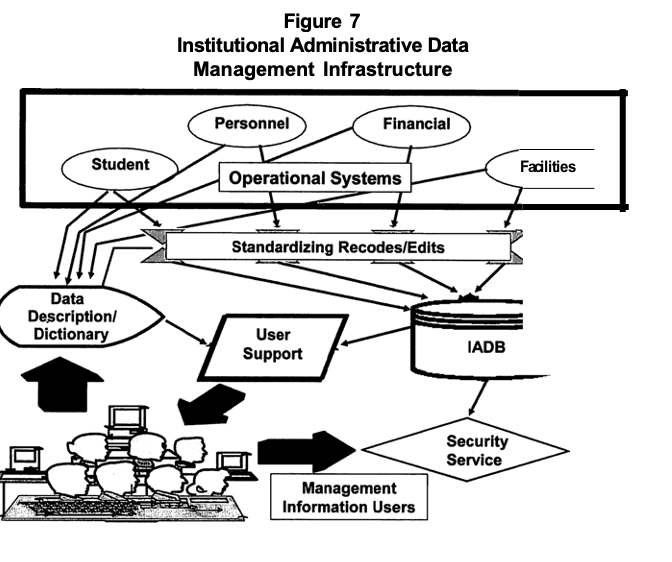

There have been rapid changes in the technology that we employ to manage data. Complementary changes are now occurring in our organizations and in our concepts about information systems. We are experiencing a simultaneous push by technology and pull by management to deliver useful information. We have traditionally only focused on maintaining data in operational or legacy systems which were organized by staff function: finance, student, personnel, etc. It is naive to assume that all required management information resides in these historical legacy systems, and that the challenge is simply to implement new technology that will deliver data on demand to an ever expanding clientele. Management's requirements are driven by new needs to perform analyses related to both long-term and short-term decisions.

The operational systems that perform day-to-day transactions were not designed to support management decision making, and probably do not contain sufficiently standardized or normalized data for the integrated, recombined, and longitudinal views required for analysis.

In response to the increasing pressures on legacy systems, existing internal and external reporting functions often use informal procedures to obtain data and interpret the variables. Often, there is not a predominance of historical files, census files, or standardized data maintained by the operating functions. In addition, there is often no formal assignment of responsibility for data management. The result is often a lack of policies to govern the processes by which those who manage operational systems capture, store, define, secure, or provide data and reports to institutional management.

Typically, when management requests for information fan out to different operational areas, each area responds by providing data from the perspective of its functional activity. Typically, this results in: (1) the executive drowning in data with no options to analyze and transform the data to useful information; (2) the executive receiving multiple, biased, and conflicting information; (3) the information being based on incomplete assumptions about the desired analysis and on data that are not integrated; and, (4) failure to properly obtain needed information produces organizational overhead, requiring the expenditure of additional resources.

Nevertheless, there are several organizational trends at many of our colleges and universities which offset the rather gloomy picture painted above and set the stage for creating a data management culture that can effectively respond to management information requirements.

The first trend is toward greater efficiency and competitiveness. With this comes a willingness to consider a variety of strategic alternatives. These strategies include changes in support structures, processes, and responsibilities. They also often produce an analysis of data needs. The second trend is the migration to new operational systems, and increasingly distributed modes of operation. New development tools, as well as off-the-

9

shelf software, include structured methodology for building an enterprise's data architecture, data definitions, and standard code usage. Since these methodologies provide the foundation for any executive information system, the migration presents the opportunity to analyze management's information requirements. The third trend is recognition that there is aneed for more participative management. (Harper, p. 10-11)

The use of participative management extends the number and also the skill-set of managers who need to access and use data from across the organization. It also changes the focus and organization of the data needed.

Decision makers often need an integrated view of multiple operational systems. This includes peripheral systems that are often not prime candidates for migration to integrated operational systems. This leads to the realization that there are ongoing data management activities that must be maintained to assure the availability of high quality information from all operational systems to support proactive decision making.

Authors in trade magazines and journals have labeled these trends as "reengineering," "right-sizing," and "total quality management," "Continuous Quality Improvement," Customer Relations Management," and then "Knowledge Management." Each term points toward improvement of the information support functions and spurs an interest in what can be done to accelerate progress. However, the lack of a structured process for coordinating various data management activities and relationships across the organization limits and jeopardizes potential momentum toward progress. Still, there remains a multitude of potential opportunities within the existing culture to leverage traditional relationships and friendships and to develop prototypes that push management's "hot buttons," thereby creating support for ongoing, quality data management work. Because organizational culture is dynamic, it is often accompanied by pressure to produce quick results that benefit the current culture. Producing relevant prototypes that address current needs increases the likelihood that quality data management projects will be included as part of other evolving organizational changes.

We can start with an understanding of the information support process, which focuses on the decision maker's needs (Table 1). We also need to define the authority and responsibilities of people who deal directly with operations and train them to respond to opportunities and

Table 1 Decision Maker's Needs

|

Needs.... |

Instead of... |

|

Tactics, techniques, and procedures |

Long-range plans |

|

Incremental changes s |

Major decision |

|

Majority of time spent on defining the problems, developing possible solutions, and monitoring results |

Majority of time spent on making a decision |

10

challenges based on the purpose of the organization, various alternative actions, and the authority inherent in the situation. Critical in this process are the acceptance and understanding of a conceptual model which includes operation functions, the process of decision support, and the use of technology which enables information flow. Equally important is the awareness that real progress depends on small incremental steps rather than large leaps.

Basic Concepts of Information Support

When our organizations were more static, information flowed on a consistent and traditional path. Management by evolution was perfected by incremental trial and error. Situational change was not anticipated and tended to evolve slowly, allowing the management culture to adapt slowly and comfortably. Today's dynamic organization needs a managed data resource whereby information flow can be adapted daily to meet current needs. We no longer have the luxury of evolving our information management any more than we have the luxury of evolving our organization. The future needs of the organization must be anticipated. Concurrently with the anticipation of new organizational structures and functions, there need to be new decision-support infrastructures. By understanding the steps in providing information and data, we can provide the required facts where the organization needs them, when the organization needs them. The old ways of changing the information support structure after the organization is changed will result in the data never catching up to a decision maker's needs.

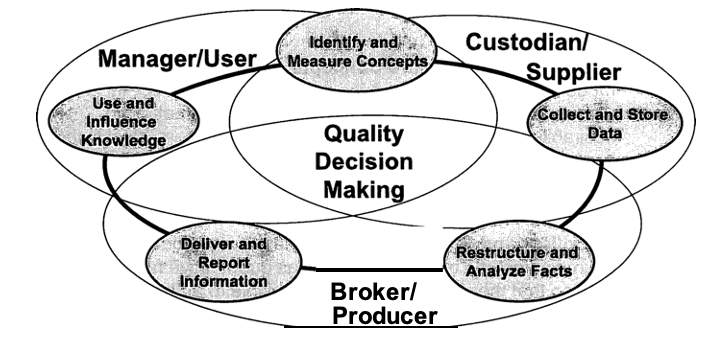

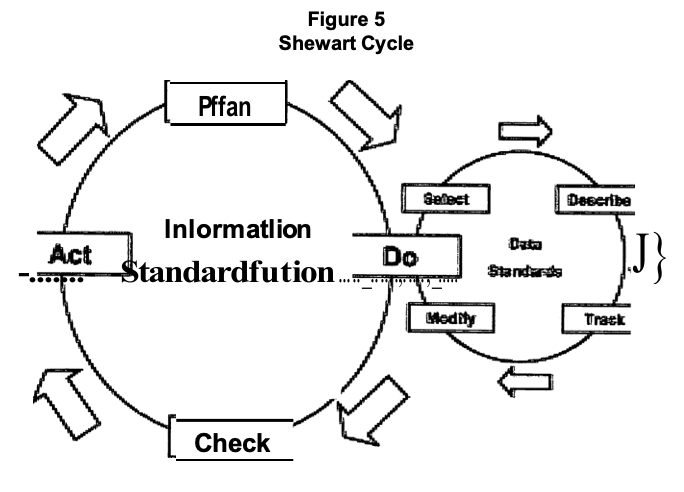

We think the Information Support Circle (Figure 1) is a useful conceptual model for creating a relevant and sufficient awareness of these issues. As a model, it admittedly is a simplification of reality. However, it provides a basis for discussion of the process of creating decision support information.

Figure 1 Information Support Circle

The Information Support Circle has five basic functions, three key roles, and two major properties. The five functions provide a basic framework for the tasks associated with helping people make informed decisions. The roles provide a structure in which specific tasks can be organized and assigned to individuals in the organization. The properties guide us in improving the quality of information provided to the decision process.

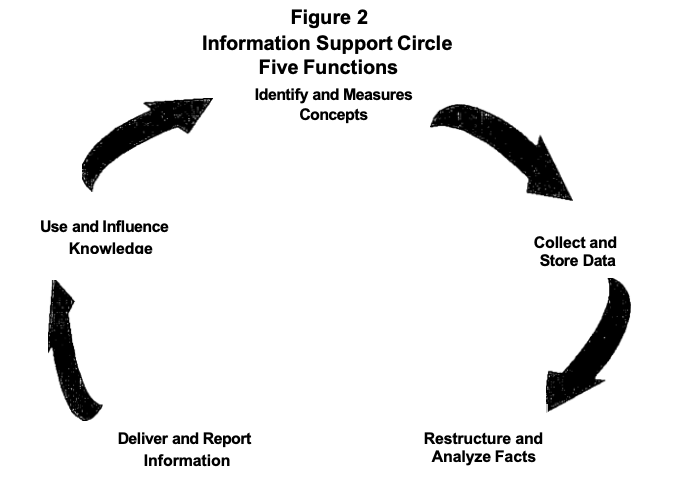

The Five Functions

The five functions provide a framework within the Information Support Circle for describing specific steps and activities (Figure 2). Each function has two sequential activities and a standard of quality. A short description of each function follows. Specific activities and quality standards are discussed in detail in Chapter 3.

1. Identify and Measure Concepts. Develop a conceptual model of the situation. Describe its major components. Include measurements which explain the feasibility of alternatives, desirability of outcomes, and availability of resources. Identify the key individuals and groups of individuals who have a stake in the process. Define the essential elements required to make the decision.

2. Collect and Store Data. Obtain data from various relevant sources. Include qualitative as well as quantitative facts. Store data so that they are secure and accessible to authorized users. Use technology where appropriate. Standardize the codes used and develop a collection of definitions and documentation. Edit

12

and audit for correctness. Document the procedure, the situation, and the process of data capture and storage.

3. Restructure and Analyze Facts. Bring the data together from the various sources. Integrate using standard merging variables. Link to qualitative facts. Analyze with appropriate statistical and deterministic procedures. Summarize and focus the data on the situation. Compare with peer groups, look at trends, and describe limitations in the methodology.

4. Deliver and Report Information. Apply the information to the situation. This includes using appropriate delivery technology to make the restructured facts available for further restructuring. Interpret instances where there may be differences or gaps between the collection of the data and the current need to make decisions. Identify systemic sources of bias. Focus reporting on the specific alternatives and support interpretations of causality and desirability of outcomes.

5. Use and Influence Knowledge. Use facts to clarify the situation, to make a decision, or to advocate a belief or value. Identify the way the new knowledge expands the previous understanding. Determine the changes in the environment and what is assumed about the situation. Consider the importance of new information relative to the issues incorporated in the previous conceptual model.

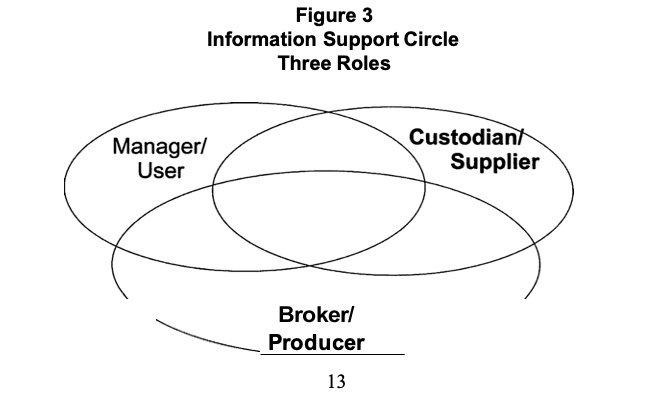

Three Key Roles

The roles explain the way the functions, described above, are related to institutional management. These roles may be thought of as clusters of responsibilities, tasks, and activities that can be assigned. The roles bridge the separate tasks of the functions into sets of authorities, responsibilities, and abilities (Figure 3). The following are

brief descriptions of the three roles in the paradigm. More complete descriptions are provided in Chapters 4, 5, and 6.

1. Custodian. The custodian focuses on the integrity of the data and helps select appropriate data for the analysis. The custodian adds value by contributing operational knowledge. The custodian needs data management and administration skills. The custodian also influences the selection of the methodologies and the development of the essential questions which identify the information need.

2. Broker. The broker works to transform the data into information. This involves integrating data from various sources, restructuring data to focus on the areas of concern, and analyzing the data to look for causality, desirability of outcomes, and parsimony of elements. The broker participates and consults in the selection of the methodologies and the development of the essential questions for decision making.

3. Manager. The manager takes the information and applies it to the situation. In this manner, the information becomes part of the intelligence of the situation and reduces the uncertainty of the situation. The manager is often the decision maker but may also be someone responsible for supporting the decision process.

Some of the decisions are external, such as identifying what product to buy. Other decisions are intermediate and internal, such as evaluating business processes. The manager has a primary responsibility to identify the important elements of the problem, and also the unknowns.

Two Major Properties

Two properties provide a mechanism for evaluating the functions and the roles. These are the core values of the model, governing the essential actions: DO THIS and GET THAT. We have found it useful to separately conceptualize these as two properties although the two are not mutually exclusive. Dependency is the property which deals with the influence each function has on the others. Cooperation is the property which deals with the relationships between the three roles. These properties are briefly described below and further developed in Chapter 7.

1. Dependency. The five functions form a circle (Figure 2). The value, which can be added for each function, is dependent on the quality of the preceding function. How you do each step defines the quality of every following step. No function can produce quality or add value beyond the quality of the preceding function. For example, if the reliability of the data from the capture and storage step is poor, then no sophistication in methodology or increase in the amount of resources for analysis will overcome

14

the low reliability. If the conceptual model is weak and erroneous content is selected for measurement, the resulting information will be seriously compromised regardless of the resources employed at a later step. The quality of the information produced is only as good as the quality of the weakest link in the circle of functions.

2. Cooperation. The quality of the information you get depends on the mutual involvement in key activities. The ability to provide quality management comes from mutual investment and interaction of the three roles (Figure 3). If the individual filling any one of the roles decides to act only in his/her own best interest, the integrity of the information support process is negatively impacted. In a self-contained operational scenario, the data custodian may change the definition of a variable to support a new operational need and inadvertently compromise the intended use in another area. The broker may propose an analysis requiring data that do not exist. The manager may start looking at new problems without notifying either the broker or the custodian.

Cooperative interaction among the three roles permits identification of opportunities to solve specific problems while positioning the data structure to support future needs. It allows the use of analytical procedures (1) that can be focused on identified needs and (2) which are sufficient for the integrity of operational data. This synchronization provides the highest quality information to support the best organizational outcomes given the constraints of time and resources.

Changing Users

As institutions respond to change, one likely outcome is downsizing. During downsizing, individuals typically leave organizations based on their readiness to leave, rather than the needs of the organization. Downsizing and rightsizing typically occur in the middle of the organization, the domain of staff specialists and middle managers. Further, reorganizing and restructuring often result in nontraditional management structures such as matrix reporting, task forces, or cross-functional teams. All of this requires individuals to develop a broader skill set. "Empowered" individuals need to be able to solve problems and recognize when they need to involve others in the problem solving.

The manager of tomorrow needs a broader and more flexible range of skills. This monograph helps meet this need in two key ways. First, a set of categories in which skills may be necessary are identified. Second, it shows related areas and types of activities that require cooperative work.

Each data management role encompasses a set of skills and expertise that can be brought to bear on any situation. The custodian supports the functional area. This requires traditional business skills such as accounting, personnel, and finance. The broker role requires

15

experience with methodologies including management science, statistics, decision science, and computer science. These are the methodologies which facilitate the translation of data from the functional areas into information to be provided to those who apply the information to a specific situation. The manager role requires an understanding of decision science, organizational behavior, and knowledge of specific characteristics of the industry. A decision maker in any of these areas needs to understand and appreciate the contribution of the others.

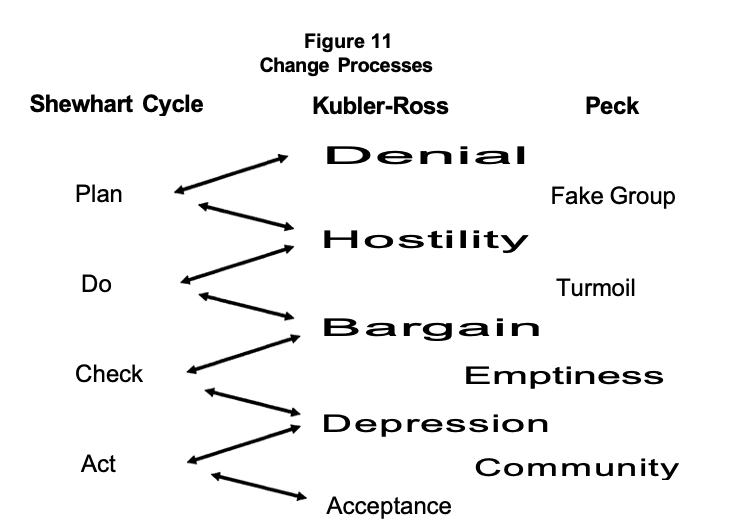

When institutional change becomes an identified need, reactions are often much the same as Kubler-Ross (1974) outlined in an individual's acceptance of death and dying. The first reaction is denial that the change is required. The second reaction is hostility that results from uncertainty and anxiety as individuals admit the rules are changing. This is when cooperative work between units across the organization is most essential to both organizational and individual well-being. At the same time however, cooperation may also be extremely difficult to achieve because of the individuals' insecurities. In this situation, quality information can be leveraged to bring the fearful together and create a frame of reference for ongoing cooperative work. The resulting bargaining makes functional changes possible, although the following depression must be dealt with before acceptance.

Changing Data Support Structures

The data support for new organizations will depend on the development of effective management data. This requires focusing on the decision process and on the uses of the data. Decision makers need an integrated set of data from both internal and external sources, including both current data and point-in-time historical longitudinal data. Because users operate in a variety of technological environments, the support structure must adapt with new software tools, expanded skill sets and training, and a new appreciation for the final product-management information.

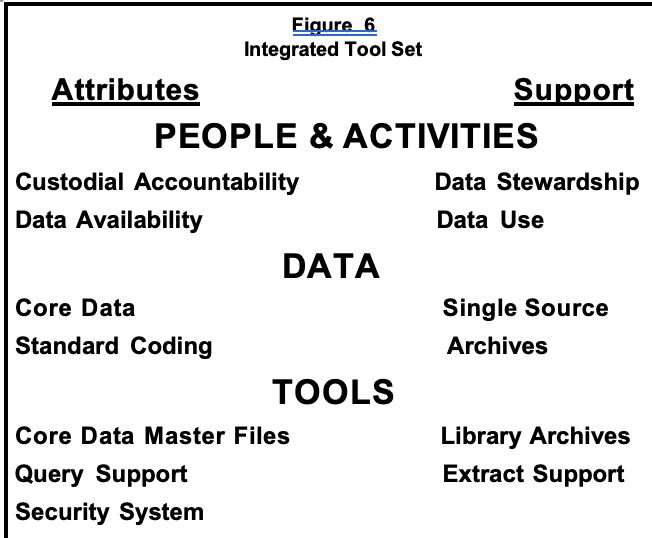

In order to create a viable data support structure, the following will have to be developed and/or implemented at the institution:

Data Requirements

• Standardized and integrated current data: selected data, defined and restructured to reflect the business needs as viewed by decision makers

• Standardized and integrated historical data: selected data, defined and restructured to reflect changes, which are comparable with other institutions and useable in trend analysis

Software Tool Requirements

• Inter-connectivity tools: software to extract, transform, and integrate the separate databases, which support multiple types of networks, and are easy to use

• Relational and analysis tools: software which creates and loads

16

data structures and which supports statistical analysis of the data, tabular and graphical displays, and which answers "what if' business intelligence analyses.

• Security tools that are designed for a networked sub environment: software that limits data access to sets of measures and groups of people, and prevents disruptions of service.

Skills and Training Requirements

• Skills: to analyze and restructure the data with the appropriate tools

• Training and support: in technology and methodology (including statistical sophistication, Web strategies, data cutting tools, and an awareness of the latest developments for data access)

• Knowledge: to map complex analytical procedures and organizational processes

• Ability: to verify data validity and reliability

The Changing Institutional Research Function

Technical tools are necessary, but not sufficient to provide quality information support. The mission of institutional research is to enhance institutional effectiveness by providing information which supports and strengthens operations management, decision making, and unit and institutional planning processes. The institutional research function is most closely aligned to the information broker role and relates to data in three ways:

User. Institutional research is a user of all the critical and key administrative data elements. As such, it has many of the needs of managers.

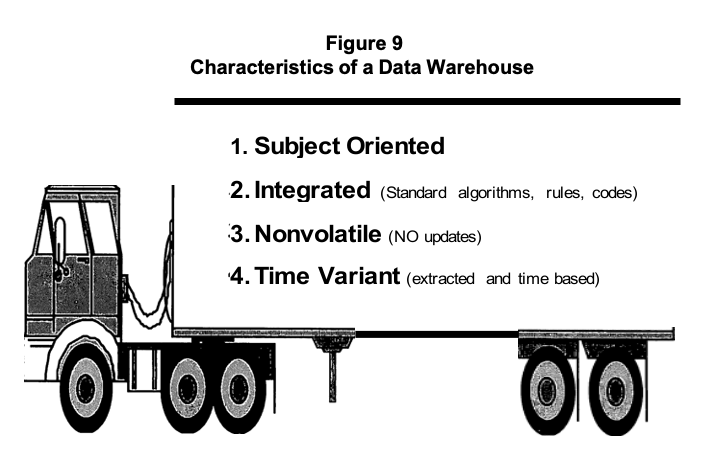

Producer. Institutional research is a producer, dealing with data quality issues and data integration challenges in order to provide internally and externally standardized extracts of time variant data. This can be thought of as populating a data warehouse.

Supplier. Institutional research has an obligation to both its internal and external customers to supply current levels of information and analysis support while creating more effective delivery strategies to address requirements of various users of institutional data. This also sometimes involves us getting into the data storage business with data marts, Operational Data Stores (ODS), and data warehouses.

It is clear the successful institutional researcher is dependent on positive relationships with data suppliers and on the quality of their data.

17

Simply put, institutional research is the basic process of adding value to the information and data available to a manager. Recent workshops conducted on effective institutional research highlighted four goals for effective institutional research related to data. These included providing accurate and timely data, developing a system of data collection, creating usable data, and providing trend data. Not surprisingly, participants identified data problems as the major barrier to their effectiveness in meeting these goals. Specific data problems include:

Data Definitions. Disagreement on definitions; incorrect interpretations; data collected in different forms; and, lack of adequate comprehensive measures.

Technology. Lack of hardware and software resources to maintain, transfer and analyze data; poor data collection tools; lack of data management tools; and, lack of decision support tools.

Data Access. Inaccessible data at both the local and state level and lack of data about the data (where the data are located, how the user obtains access, etc.).

To increase effectiveness, institutional research must support efforts to improve the data quality. There are several reasons for this. As a comprehensive user and customer, institutional research has extensive data-use methodology skills. Further, professionals in institutional research can teach these skills. These skills include using statistical, comparative, projective, and qualitative methodologies. Also, as an office which often coordinates reporting with external agencies, institutional research is heavily involved in negotiating definitions and supporting the institutional reporting and information requirements.

Standardization can enhance the value of state and national databases. When offering support to various internal offices, institutional research can often assist custodian efforts to improve standardization of data and definition of codes. This is also the opportunity to bring the manager together with the data custodian so both can better understand the need to obtain and properly use data.

A View for Adding Value

We have discussed the new management challenge facing our institutions, a sea change which forces changes in the way decisions are made. This impacts how decision makers are supported and increases management's demand for useful data. The institutional research function is positioned to be an effective force in the improvement of the integrity and value of the data and, therefore, is at the root of our institutions' response to change.

There is no best way to improve the management of data. There are, however, some strategies which are likely to be successful. The ideas and thoughts in this monograph are presented to help focus on some of these strategies. The ideas are organized to work through a

18

sequence of looking at the need for improving data management, to describe some of the steps which we and our colleagues can take to overcome the problems in our data, and, finally, to look at what can be done to make things better.

These discussions challenge all of us to deal with three questions, which lead the way to knowledge:

• What do we know?

• What does it mean?

• So what?

In the next chapter, we discuss the five functions of the Information Support Circle and the problems, or "diseases," often associated with each.

CHAPTER3

STEPS IN QUALITY INFORMATION SUPPORT

Strangely enough, it seems that the more information that is made available to us, the Jess well informed we become. Decisions become harder to make and our world appears more confusing than ever. Psychologists refer to this state of affairs as "information overload," a neat clinical phrase behind which sits the Entropy Law. As more and more information is beamed at us, Jess and Jess of it can be absorbed, retained, and exploited. The rest accumulates as dissipated energy or waste. The buildup of this dissipated energy is really just social pollution, and it takes its toll in the increase in mental disorders of all kinds, just as physical waste eats away at our physical well being. The sharp rise in mental illness in this country has paralleled the information revolution. (Rifkin and Howard, p. 170)

Assessing the Information Infrastructure

To avoid information overload, and the attendant mental disorders suggested by Rifkin and Howard, it is helpful to start with a set of beliefs about the order of reality, mental models in Senge's terms. We do this with the five functions of the Information Support Circle. These become a means of structuring the information support process and the process of managing data. Before discussing these five functions in detail, it is helpful to realize that the institutional context in which they exist is unique to each specific institution.

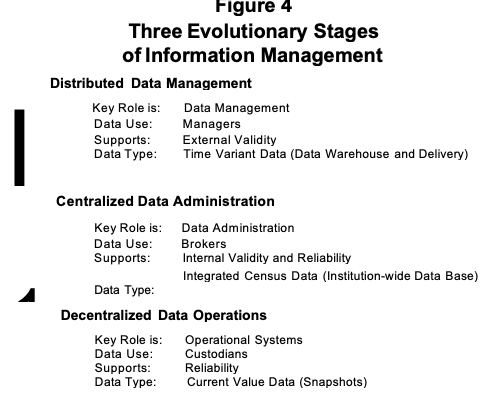

The information architecture of an institution is the predominant style of designing and maintaining the structure of information and can be described in one of three evolutionary stages: decentralized data management, centralized data management, or distributed data management (Figure 4).

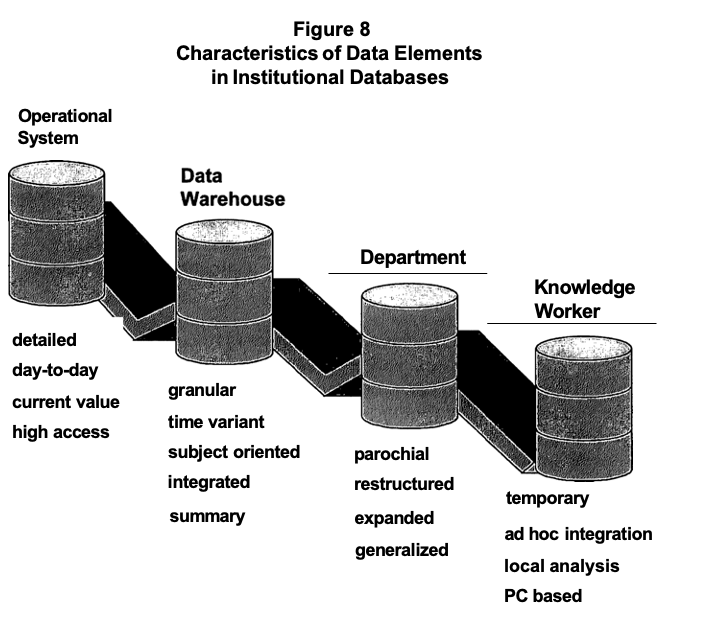

Decentralized data management focuses on data support in the operational systems. These systems and their custodians are the source of institutional data and data definitions. These data primarily are used internally to support

operational processes and decisions. The major issue in a decentralized environment is reliability: are data based on consistent and stable definitions? Are they collected systematically?

Centralized data management focuses on data administration. The primary use of the data is for studies and management information. In addition to meeting operational needs, the data now become an institutional resource, integrated and analyzed for the support of central decision makers. The major concern in this environment is the internal validity of the data. Those who create centralized databases must understand how the data are going to be used so the data can be prepared to meet the decision makers' needs. The source of the data often includes integrated census files taken from the operational systems and placed into a data warehouse.

Distributed data management focuses on the process which controls the flow of the data from source to use. Primary ingredients are the manager and the outcomes of processes or programs. Here, the major concern is the external validity of the data. To what degree can the implications of the data be applied to the current situation? Distributed systems include integrated census data which provides a campus-wide view, including department- or program-level detail.

It is important to recognize and understand an institution's stage in the continuum of information management development. The stage of the data management process should relate to the organization's stance. If the institution is operating in a highly centralized mode, the data management process should be focusing on the internal validity of the data. On the other hand, if the institution wants to provide adequate distributed data to a set of distributed decision makers, then the data management function must support reliable operating systems and an integrated census database before it can deliver data which will be of value to the distributed user. Clearly, the changes discussed in Chapter 2 are rapidly pushing all organizations toward a distributed environment. It must be re-mentioned; however, to operate at that level, the organization must provide adequate data quality at the preceding two levels.

The Role of Data

Creating and using information is the business of institutional research. Information has value when it reduces uncertainty in planning and decision making. The production of information is a cyclical process which includes identifying measures as data elements, capturing and storing appropriate data, analyzing and restructuring the data into information, and distributing and reporting the resulting information. The Information Support Circle (Figure 1) is closed by the user, when feedback is provided by the manager to the broker and the custodian about the usefulness of the information in creating knowledge and well being that add value to the institution.

Data and information have value to the decision maker if uncertainty associated with the decision is reduced. It is almost certain that the more important the situation, the greater the power of the information. In

21

other words, institutional researchers profit by the quality or usefulness of the information we provide the decision maker. More and more, our ideas and organizations will profit or lose by the quality of the information produced and communicated, internally and externally.

The development of quality information is a complicated process.

This process requires that individuals work together with a sense of common purpose, an understanding of the information process, and an awareness of, and ability to use, some basic tools to solve problems.

Below, we describe a process for developing quality information. This is an iterative process requiring cooperative efforts across the campus.

Barriers to Quality Information

As we have seen, five fundamental functions must be adequately performed to generate quality information. In the following discussion, we explain what is supposed to happen in each function. In addition, "diseases" and their symptoms that result in organizational disabilities which inhibit organizational learning are described. These diseases limit the effectiveness of each function in the Information Support Circle and as such the quality of information produced. This "disease" analogy provides a model that researchers can use to evaluate the viability of each function in their decision support efforts. Remember, these are only the principle diseases, there are other related "ailments" too numerous to discuss. Although healthy signs are no guarantee of a healthy situation for a function, their absence definitely indicates a problem. We encourage you to complete the checklist in Appendix A. The weakest link in the Information Support Circle can be diagnosed readily from this checklist. We recommend an examination of the function with the lowest score as this function is the most debilitating. Be aware however that while this particular function may have the most visible set of problems, it may not be the underlying or root cause of poor data and information at your institution.

Function: Identify and Measure Concepts

The first step in the creation of decision support information is to identify and measure the appropriate concepts. The focus must be: What information do we really need to know? and, What are the essential elements of this information? Often this will start with the decisions that need to be made. Specifically, the requirement at this step is to identify facts, both qualitative and quantitative, which are needed by the decision maker to both make and communicate the decision(s). The creation of quality information must be based on a conceptual model. This model includes not only the decision being made, but also the context in which it is made, and an understanding of the constraints and the consequences of the decision. The selection of the concept(s) to measure must consider the key types of performance: effectiveness, efficiency, timeliness, and reliability. The items identified and the metrics developed must be sufficient, reliable, and reasonably easy to create. The context should focus on the ends, rather than the means. Once the process is implemented, selected measurements should not disrupt the process.

22

Disease: "Belief Bulimia." The semi-random gorging and purging of data without identification of the concepts that are to be measured.

This prevents the proper development of a model and.the measurement of appropriate variables. Here, there is no consistent focus by the decision maker, so there is no way to determine a specific area of interest or concern. After the objectives of an activity are established, there also may be a gap in the communication of those objectives to staff and analysts who are working to collect the data and create the information.

Some goals and objectives may not be measurable using available data sources. There is no shared conceptual model of the situation and, therefore, no way to evaluate the usefulness of the data for decision support. The primary symptom of "Belief Bulimia" includes random interaction between managers, brokers, and custodians, which results in "knee-jerk" inclusion of data to address specific purposes until the next crisis and rapid retro-grade studies.

Function: Collect and Store Data

After required facts are defined and operational measures are identified, the corresponding data need to be collected and stored by a unit or units within the organization. Computer-based data will be more accurate when it is collected at a single source where data first come into contact with the organization. External information should be captured in a systematic fashion using documented procedures such as environmental scanning. All coding must be done in a stable and consistent fashion. Storage requires the systematic development and use of a database management system. In addition, there must be documentation about the data which is stored in the system. A data element dictionary and set of descriptions are essential components of proper coding and storage of data. Meta-data should be identified and made available to the users. Time-date data should show "as-of' status of attributes.

Similar measures should yield similar results. The data must be captured with the same set of categories and codes regardless of where they are collected, when they are accessed, or who is responsible for the data collection. Finally, the data must be internally consistent when cross checking codes. For example, an active address should only exist in the record of someone who is living. Census-date data marts and similar frozen data sets are very valuable for producing stable and consistent data.

Disease: "Data Dyslexia." This occurs when there is an inability to recognize data, often confusing one data element for another. Data coding often relies on an individual's memory, or is recorded on post-it notes, or the backs of envelopes, resulting in confusion about what is stored, where it is stored, when it was stored, and why it was stored.

Concerns are often met with a "we-have-always-done-it-this-way" statement. The same variable, for example "student," will have different meanings in different tables in the data structure. The lack of a strong institutional commitment to the information support function results in the

23

lack of policies and procedures. Resources are not allocated to ensure that data collection processes are documented and coding standards are in place. Traditional compartmentalization of units within the institution tends to reinforce activities in one department that may be inconsistent with the capture of data to support other departments. This environment is characterized by a lack of consistent technology and standards for the development of the data dictionary or other documentation. This includes non-compatible machines and multiple capture points for a given data element. All of this gives rise to creative data elements based on unwritten rules. One variable is used for another purpose "until later." For example, one institution coded people handling hazardous materials as buildings so they would show up on an annual list requiring maintenance to show when the people needed annual physicals. A readily recognized symptom is found in the statement: "This is only temporary...we are getting a new system to solve that problem." Also, a symptom that "data dyslexia" on your campus is when business rules used to create derived elements cannot be produced in writing.

Function: Restructure and Analyze Facts

Here, data that were originally structured to support operational transactions are restructured into formats that support reporting and the decision support process. Data should be integrated, summarized, and analyzed so that interpretation and inferences of causality can be made. Data reduction can be accomplished by creating subgroups, combining variables, summarizing detail, and identifying trends.

Data are normally grouped by institutional entities for which the data are attributes. Student transactions are stored in the student database, faculty data are stored in the faculty database, and so on. These data must be restructured and integrated to address organizational issues, and can require combining existing variables and creating new variables.

Business rules come into play to integrate and edit the data. Adequate tools should be involved in transforming the data but tools do not replace the need for human thought. In addition, analyses may be required to synthesize events where the summary of an analysis becomes a new variable. Combining appropriate data can form metrics and indices. For example, based on a series of decision rules an employee may be classified as faculty. This handling and restructuring of data may involve merging qualitative and quantitative data. Properly done, restructuring and converting the data into the users' frame of reference results in the reduction of the complexity of the data without major loss of relevant detail. This is the intent of methodologies such as performance indicators. The method for recoding or recreating a variable is also needed in the meta-data so that an audit can check the validity of the variable.

The analysis phase of information support reduces the amount of data to a level which can reasonably be comprehended by the decision maker while retaining the primary facts portrayed in the data. This step is critical to establish the causality in producing the outcomes being

24

considered. It is also critical in the stream of evidence that leads to the decision of preferences. This phase integrates multiple sets of facts. The process is rational and sequential, taking into account the intended use.

Disease: "Dimensional Dementia." This disease strikes when there is a lack of agreement on the frame of reference for analysis and interpretation. Should the analysis be concerned with one-year results or five-year results? How much data should be summarized? Interpretation is independent of the context in which data were collected.

Summarization occurs over variables which have no logical relationship. Analysts use the most impressive statistics available. Data are segmented into groups without an understanding of the rationale for segmentation. Uninterpretable statistics are the result of irrational data groupings. Those who suffer from "Dimensional Dementia" forget why the analysis is being done and data are grouped incorrectly. No one remembers how the data were recorded or what the resulting metrics mean.

Function: Deliver and Report Information

Delivery is the process of placing the restructured and often analyzed data and "cleansed" information in a location where the manager has access, like a data warehouse or Web site. Reporting is the advanced process of interpreting the information in context. Starting with the executive summary and continuing through the facts and figures, delivery and reporting should focus on the specific need of the users.

The broker needs to provide support so that the manager can generalize the results of the data and information for various desired uses. The reporting should be structured so the manager can check whether the results and data apply to current and future situations. Reporting should allow the manager to determine how to use the results in a valid manner. To what future situations will the results apply? There should be the opportunity to integrate qualitative as well as quantitative data. Reporting should involve some basic consideration of outcome preference and causality.